Hi, I am Cap Huu Anh Tri

Data Engineer

I am a fourth-year student at the University of Information Technology (UIT), VNU-HCM. I'm passionate about working with data and am currently seeking a Data Engineer position.

More About Me

Messenger: capp.2003

GitHub: caphatri

About Me

Hi, I'm Cap Huu Anh Tri

As a fourth-year Computer Science student with a solid foundation in Data Engineering and Big Data technologies, I am eager to secure an internship where I can apply my skills. Though lacking practical experience, I am hardworking, willing to learn, and dedicated to contributing effectively to the team.

Birthday

October 7, 2003

Address

Ho Chi Minh City

Objective

Short-term:

Secure an internship as a Data Engineer to gain hands-on experience and apply my knowledge to real-world projects.

Long-term:

Develop into a proficient Data Engineer, mastering advanced tools and contributing to impactful data-driven initiatives.

My Skills

Programming Languages

- Python: 90%
- SQL: 80%
- JavaScript: 50%

Database Management

- MySQL: 80%
- PostgreSQL: 50%

Big Data

- Apache Hadoop: 50%
- PySpark: 50%
- Kafka: 70%
- Apache Airflow: 50%

Other Skills

Visualization

Use Apache Superset and Power BI to visualize data from different sources such as Microsoft Excel and SQL Server.

Microsoft Excel

Use Microsoft Excel competently, especially Pivot Tables and Power Query.

Web Scraping

Use BeautifulSoup and Selenium to get data from different sources.

Docker

Use Docker to create, deploy, and manage containerized applications.

Machine Learning

Linear Regression, Logistic Regression, Naive Bayes, SVM, XGBoost, K-Means, PCA.

Report

Use Overleaf to create well-structured and professional reports.

Qualification

Experience

Updating ...

Education

University of Information Technology (UIT), VNU-HCM

Computer Science

GPA: 8.07/10 (3.23/4.0)

2021 - 2024 (Expected)

Project

Automated ETL and Visualization of Premier League Match Results

Utilize ETL to extract match results for analysis and visualization.

- Web Scraping: Use BeautifulSoup to collect data from the EPL source and store it in MySQL as a data lake.

- Automated ETL Pipeline: Use Apache Airflow to automate collecting data into MySQL, extracting it from MySQL, transforming it into a usable format, and loading it into a PostgreSQL database. Afterwards, use smtplib to email the results.

- Data Visualization: Leveraging Apache Superset to create interactive dashboards and visualizations for analyzing Premier League match results.

- Docker Compose Setup: Streamlines deployment and management of services including Apache Airflow, PostgreSQL, Redis, MySQL, and Apache Superset.

- Technologies used: Python, BeautifulSoup, MySQL, Apache Airflow, smtplib, PostgreSQL, Docker.
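
The extract, transform, and load steps described above can be sketched roughly as follows. This is only an illustration, not the project's actual code: it uses Python's built-in `sqlite3` as a stand-in for both MySQL and PostgreSQL, and the table names (`raw_matches`, `matches`), column names, and the `"2-1"` score format are all assumptions made for the example.

```python
import sqlite3


def extract(source):
    """Read raw rows from the staging table (hypothetical name: raw_matches)."""
    return source.execute("SELECT home, away, score FROM raw_matches").fetchall()


def transform(rows):
    """Split an assumed 'H-A' score string into integer goals and derive the result."""
    cleaned = []
    for home, away, score in rows:
        home_goals, away_goals = (int(part) for part in score.split("-"))
        if home_goals > away_goals:
            result = "H"  # home win
        elif home_goals < away_goals:
            result = "A"  # away win
        else:
            result = "D"  # draw
        cleaned.append((home.strip(), away.strip(), home_goals, away_goals, result))
    return cleaned


def load(target, rows):
    """Write cleaned rows into the analytics table (hypothetical name: matches)."""
    target.execute(
        "CREATE TABLE IF NOT EXISTS matches "
        "(home TEXT, away TEXT, home_goals INTEGER, away_goals INTEGER, result TEXT)"
    )
    target.executemany("INSERT INTO matches VALUES (?, ?, ?, ?, ?)", rows)
    target.commit()


def run_pipeline(source, target):
    """Run extract -> transform -> load once and return the number of rows loaded."""
    rows = transform(extract(source))
    load(target, rows)
    return len(rows)
```

In a scheduled setup, each of these functions would typically become its own task so that a failed step can be retried without rerunning the others.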

Real-time Anomaly Detection in Web Server

Real-time detection and visualization of anomalies in web server logs.

- Real-time Data Ingestion: Stream web server logs from MySQL using Apache Kafka.

- Efficient Storage: Use Apache Pinot for storing and querying time series data.

- Anomaly Detection: Implement a machine learning model (Autoencoder with Regression Model) to predict anomalies.

- Interactive Visualization: Display results and insights using Streamlit.

- Docker Compose Setup: Streamlines deployment and management of services including Apache Kafka, Apache Pinot, Streamlit, and MySQL.

- Technologies used: Python, MySQL, Apache Kafka, Apache Pinot, Streamlit, Docker.

Medicine Booking Web Application Project

Create an online prescription system.

- Web App Design: Design admin and user interfaces for online prescription booking.

- UI Development: Build interfaces using ReactJS for a modern user experience.

- Database Setup: Establish a MySQL database to store prescription data efficiently.

- Backend Implementation: Develop backend functionality using the Express framework and RESTful APIs.

- Technologies used: JavaScript, MySQL, ReactJS, NodeJS, RESTful APIs, Express framework.

Recommended Topic Hashtags

Create an automated labeling system for Facebook posts (about ML and DS).

- Data Collection: Use Selenium to crawl Facebook post data.

- Data Preprocessing and Labeling: Preprocess the data and assign labels to each post based on predefined hashtags using Pandas.

- Model Training: Train Machine Learning models on TF-IDF and Bag of Words features. Evaluate and select the best-performing model.

- Demo: Create a user-friendly interface with Streamlit that automatically labels Facebook posts.

- Technologies used: Python, Machine Learning, Streamlit, Selenium, scikit-learn.
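
To illustrate the TF-IDF features mentioned above, here is a minimal standard-library sketch. It is not the project's code: the function names are hypothetical, the tokenizer is a plain whitespace split, and it uses one common textbook weighting (term frequency times `log(N / df)`). scikit-learn's `TfidfVectorizer` applies a smoothed IDF and normalization by default, so its values will differ.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately simple tokenizer)."""
    return text.lower().split()


def tf_idf(docs):
    """Return one {term: weight} dict per document.

    weight = (term count / document length) * log(number of docs / docs containing term)
    """
    tokenized = [tokenize(doc) for doc in docs]
    n_docs = len(tokenized)

    # Document frequency: in how many documents each term appears at least once.
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))

    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        vectors.append(
            {
                term: (count / total) * math.log(n_docs / doc_freq[term])
                for term, count in counts.items()
            }
        )
    return vectors
```

A term that appears in every post gets weight zero under this weighting, while terms concentrated in a few posts score higher, which is what makes them useful signals for assigning a hashtag label.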

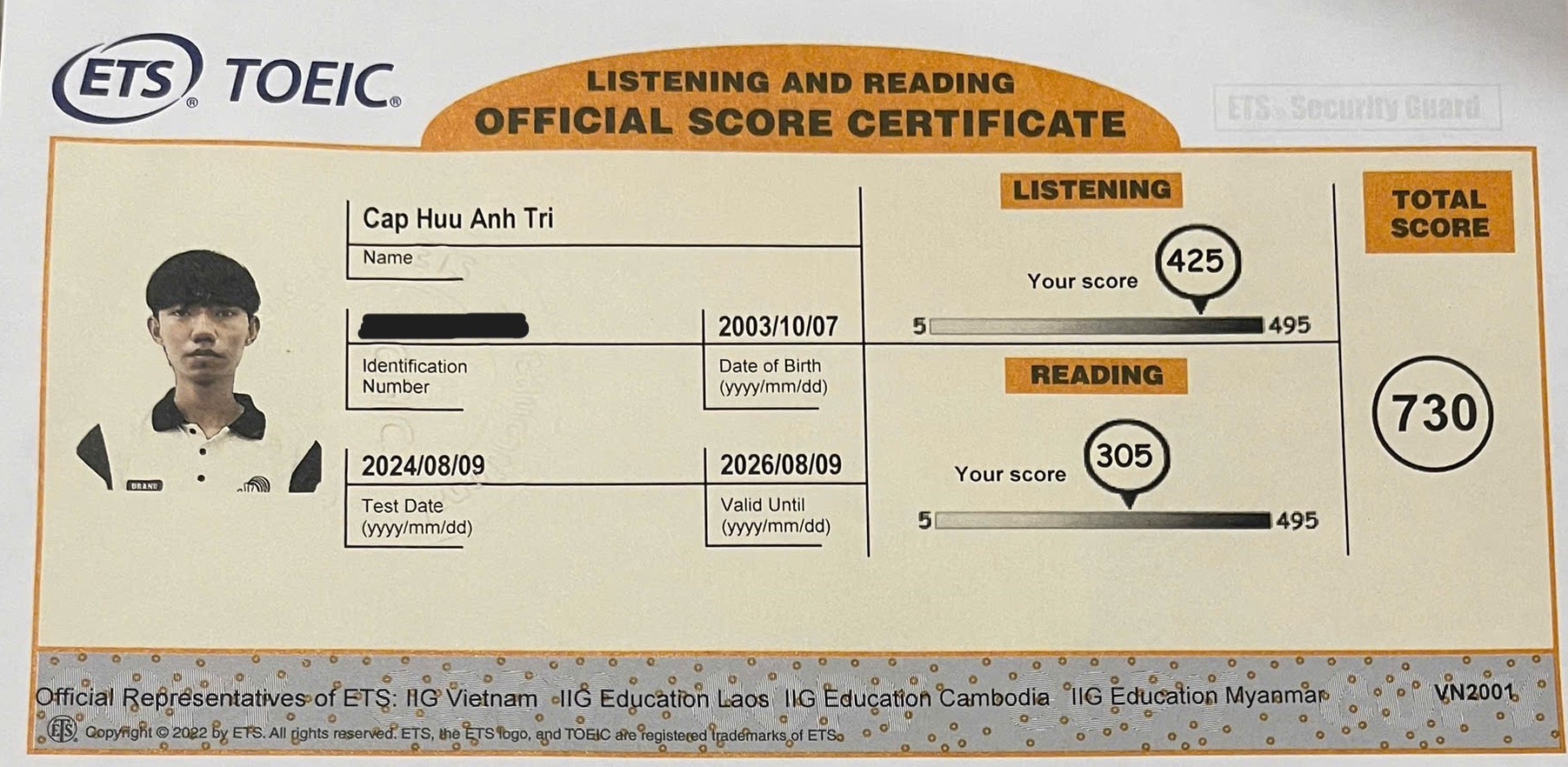

Achievements

TOEIC Certificate by IIG VietNam